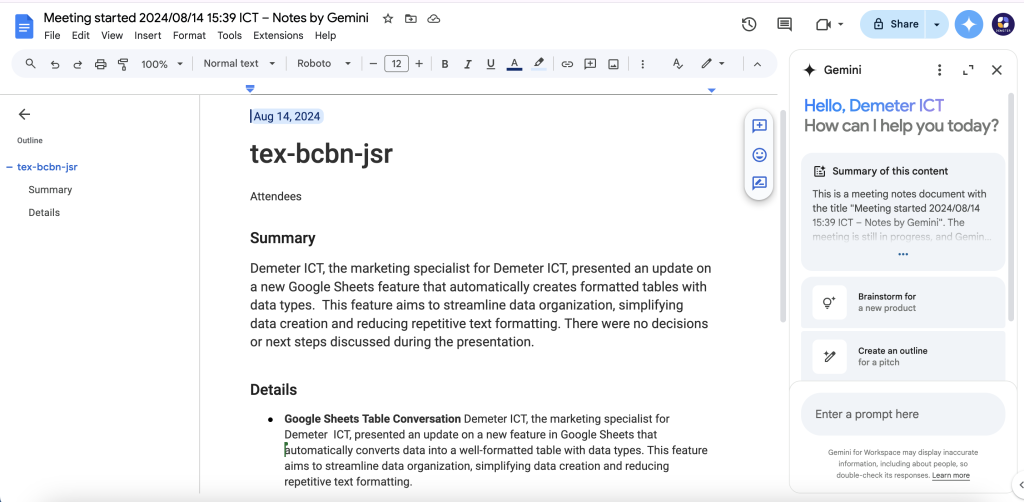

Face recognition technology

Overview

This document describes an open-source face recognition demo that uses machine learning. It is built on dlib's state-of-the-art face recognition model, which is based on deep learning. The model has an accuracy of 99.38% on the Labeled Faces in the Wild benchmark.

System Requirements

Note: use a GPU with compute capability >= 5.0.

Installation options and Usage

How face recognition works

Face recognition is really a series of several related problems:

- First, look at a picture and find all the faces in it

- Second, focus on each face and be able to understand that even if a face is turned in a weird direction or in bad lighting, it is still the same person.

- Third, be able to pick out unique features of the face that you can use to tell it apart from other people, such as how big the eyes are or how long the face is.

- Finally, compare the unique features of that face to all the people you already know to determine the person’s name.
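The four steps above can be sketched end to end with the face_recognition library linked at the end of this document. This is a minimal sketch rather than the demo's actual code: the function name `recognize_faces`, its parameters, and the idea of passing a name-to-path dictionary are assumptions made for illustration, and the 0.6 tolerance is borrowed from the library's default for `compare_faces`.

```python
def recognize_faces(known_image_paths, unknown_image_path, tolerance=0.6):
    """Return (face_location, name_or_None) for each face in the unknown image.

    known_image_paths: hypothetical dict mapping a person's name to an image path.
    """
    # Third-party dependency, imported here so the sketch can be read without it installed.
    import face_recognition

    # Encode one face per known person (steps 1-3 applied to the reference photos).
    known_names, known_encodings = [], []
    for name, path in known_image_paths.items():
        image = face_recognition.load_image_file(path)
        encodings = face_recognition.face_encodings(image)
        if encodings:  # skip photos where no face was found
            known_names.append(name)
            known_encodings.append(encodings[0])

    # Steps 1-3 on the unknown image: find faces, then encode each one.
    unknown = face_recognition.load_image_file(unknown_image_path)
    locations = face_recognition.face_locations(unknown)
    encodings = face_recognition.face_encodings(unknown, known_face_locations=locations)

    # Step 4: pick the closest known encoding, if it is close enough.
    results = []
    for location, encoding in zip(locations, encodings):
        name = None
        if known_encodings:
            distances = face_recognition.face_distance(known_encodings, encoding)
            best = int(distances.argmin())
            if distances[best] <= tolerance:
                name = known_names[best]
        results.append((location, name))
    return results
```

A call might look like `recognize_faces({"alice": "alice.jpg"}, "group_photo.jpg")`, where both file names are placeholders.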

Face recognition – step by step

Let’s tackle this problem one step at a time. For each step, we’ll learn about a different machine learning algorithm. I’m not going to explain every single algorithm completely to keep this from turning into a book, but you’ll learn the main ideas behind each one and you’ll learn how you can build your own facial recognition system in Python using OpenFace and dlib.

Step 1: Finding all the faces

- Use a method invented in 2005 called Histogram of Oriented Gradients (HOG).

- The image is first converted into a HOG representation, which records the dominant direction of brightness change in each small region. To find faces in this HOG image, we look for the part of the image that most closely resembles a known HOG pattern extracted from many training faces.
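To make the HOG idea concrete, here is a minimal pure-Python sketch that builds the orientation histogram for a single cell and compares two histograms. The function names, the 9-bin layout, and the use of cosine similarity are assumptions for illustration; a real detector such as dlib's works over many cells with block normalization and a trained classifier rather than this simple comparison.

```python
import math

def cell_histogram(cell, bins=9):
    """Histogram of gradient orientations (0-180 degrees) for one grayscale cell.

    cell is a list of rows of pixel intensities; border pixels are skipped.
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, len(cell) - 1):
        for x in range(1, len(cell[0]) - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]   # horizontal brightness change
            gy = cell[y + 1][x] - cell[y - 1][x]   # vertical brightness change
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0  # unsigned orientation
            hist[int(angle // bin_width) % bins] += magnitude
    return hist

def cosine_similarity(a, b):
    """Similarity of two histograms; a stand-in for matching against a known pattern."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# A vertical edge: dark on the left, bright on the right.
edge_cell = [[0, 0, 255, 255] for _ in range(4)]
edge_hist = cell_histogram(edge_cell)
```

For the vertical edge, all of the gradient energy lands in the first bin, because the brightness changes only in the horizontal direction.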

Step 2: Posing and projecting Faces

- We are going to use an algorithm called face landmark estimation.

- The basic idea is to come up with 68 specific points (called landmarks) that exist on every face: the top of the chin, the outside edge of each eye, the inner edge of each eyebrow, and so on. We then train a machine learning algorithm to find these 68 points on any face.
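Once landmarks are known, they can be used to bring a face into a standard pose. The sketch below assumes the simplest case, rotating the face so that the eyes sit on a horizontal line. The function names and the choice of using only two eye points are assumptions for illustration; the text above does not specify which transformation the demo applies.

```python
import math

def eye_tilt_degrees(left_eye, right_eye):
    """Angle of the line between the eyes relative to horizontal, in degrees."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))

def rotate_point(point, center, degrees):
    """Rotate a 2D point around a center by the given angle."""
    theta = math.radians(degrees)
    px, py = point[0] - center[0], point[1] - center[1]
    return (
        center[0] + px * math.cos(theta) - py * math.sin(theta),
        center[1] + px * math.sin(theta) + py * math.cos(theta),
    )

# Hypothetical eye landmarks (x, y) from a tilted face.
left_eye, right_eye = (30.0, 40.0), (70.0, 60.0)
tilt = eye_tilt_degrees(left_eye, right_eye)

# Rotating by the negative tilt puts the right eye level with the left eye.
leveled_right_eye = rotate_point(right_eye, left_eye, -tilt)
```

Applying the same rotation to every pixel (or to all 68 landmarks) would level the whole face, not just the eyes.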

Step 3: Encoding faces

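- Use a triplet neural network to encode each face.

- Model

- Triplet loss: training compares three images at a time (an anchor image, a second image of the same person, and an image of a different person) and adjusts the network so that the first two encodings end up closer together than the anchor and the different person.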
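The triplet loss named above can be written in a few lines. This is a sketch of the standard formulation, assuming squared Euclidean distance between encodings and a margin of 0.2; the margin value and the function names are assumptions, not values taken from the demo.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two encodings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Zero when the negative is farther from the anchor than the positive by at least the margin."""
    return max(
        squared_distance(anchor, positive) - squared_distance(anchor, negative) + margin,
        0.0,
    )

# Tiny 2-number encodings stand in for the real 128 measurements.
anchor = [0.0, 0.0]
same_person = [0.1, 0.0]

well_separated = triplet_loss(anchor, same_person, [1.0, 0.0])  # different person far away
too_close = triplet_loss(anchor, same_person, [0.2, 0.0])       # different person too near
```

The loss is zero for the well-separated triplet and positive for the second one, which is what pushes the network to move different people further apart during training.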

Step 4: Finding the person’s name from the encoding

- The last step is to find the person in our database of known people whose measurements are closest to those of our test image.

- A KNN or SVM classifier can be used on the embedding features (128 measurements for each face).
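As a rough illustration of this matching step, the sketch below does a 1-nearest-neighbour lookup over stored encodings, which is the simplest form of KNN. The function name, the dictionary layout, and the 0.6 distance threshold are assumptions for illustration, and short 3-number vectors stand in for the real 128 measurements.

```python
import math

def closest_person(known_encodings, query, tolerance=0.6):
    """Return the name whose encoding is nearest to query, or None if none is close enough.

    known_encodings: hypothetical dict mapping a name to that person's encoding.
    """
    best_name, best_distance = None, float("inf")
    for name, encoding in known_encodings.items():
        distance = math.dist(encoding, query)  # Euclidean distance (Python 3.8+)
        if distance < best_distance:
            best_name, best_distance = name, distance
    return best_name if best_distance <= tolerance else None

known = {
    "alice": [0.10, 0.20, 0.30],
    "bob": [0.90, 0.80, 0.70],
}

match = closest_person(known, [0.12, 0.19, 0.31])
stranger = closest_person(known, [5.0, 5.0, 5.0])
```

The threshold matters: without it, every face would be assigned to whichever known person happens to be nearest, even a complete stranger.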

References

Article: https://medium.com/@ageitgey/machine-learning-is-fun-part-4-modern-face-recognition-with-deep-learning-c3cffc121d78

Source code: https://github.com/ageitgey/face_recognition#face-recognition


May 05, 2025
